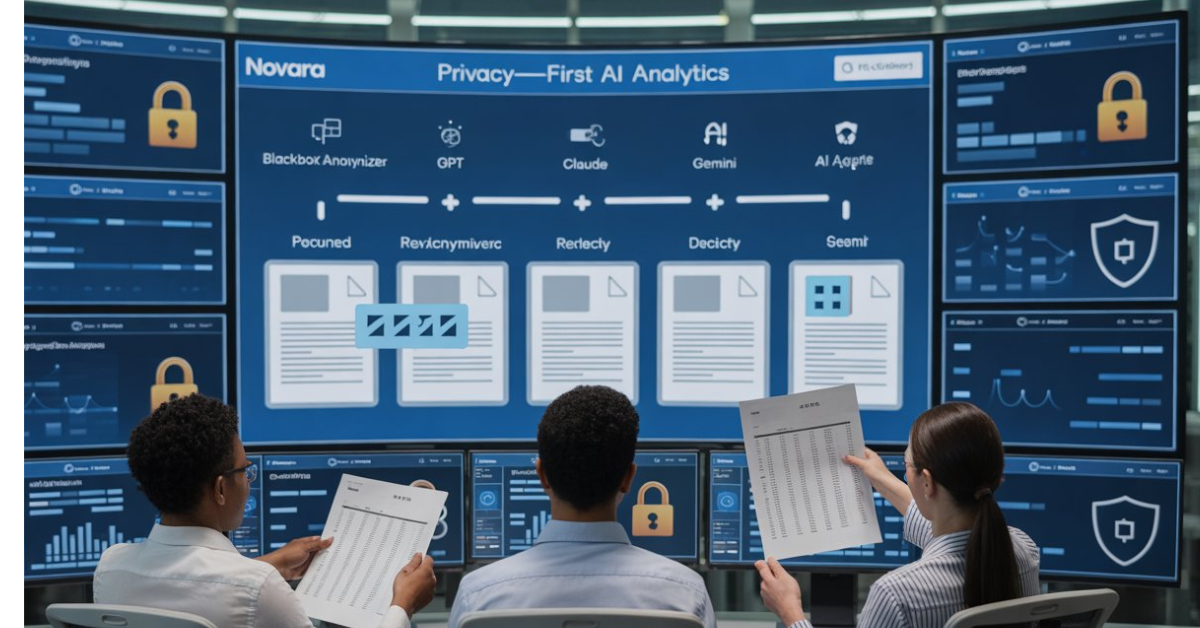

Privacy-first AI analytics is moving from a nice-to-have to a hard requirement as businesses rush to plug AI into their most sensitive documents. With Novara, Questa Safe AI is rolling out a privacy-focused AI assistant that anonymizes business files locally before any model—GPT, Claude, Gemini, or custom agents—can touch them. For US organizations facing rising regulatory pressure and real-world breach risks, this privacy-by-design approach promises AI-powered productivity without handing raw data to external providers.

Novara’s launch: what just changed?

Questa Safe AI has introduced Novara, a privacy-first AI analytics assistant built to help teams query and analyze everyday business files without exposing sensitive information to model training or third-party platforms. At its core is a “Blackbox” anonymizer that runs inside infrastructure the customer controls, either on an enterprise server or in a dedicated cloud account, so only sanitized data ever reaches AI models.

This launch lands at a moment when AI is embedded into finance, sales, strategy, and operations, but many tools still log prompts, retain histories, or reuse data to retrain models by default. As Professor John MacIntyre notes, AI privacy is no longer an abstract academic concern; it is a real-world challenge for individuals and small companies alike.

How Novara’s privacy-first AI analytics works

Questa’s architecture inserts a dedicated anonymization layer between user documents and any downstream AI model. The Blackbox engine automatically detects and replaces personal and business‑critical details before files are ingested by GPT, Claude, Gemini, or other agentic AI services.

Users can deploy the platform on-premise or in a dedicated cloud account, so raw files remain inside infrastructure they control. In effect, this is an “AI firewall” pattern: no third-party API calls before redaction, no prompt logging, no training on user data, and a two‑layer model specifically designed to remove sensitive information locally.
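Questa has not published how Blackbox detects and replaces details, so the following is only a minimal sketch of the general detect-and-replace pattern. It assumes simple regular expressions for emails and phone numbers plus a user-supplied list of organization names; the functions `anonymize` and `restore` and the `[KIND_n]` placeholder format are hypothetical. Production anonymizers typically add trained entity recognizers, and the unlisted name "Jane" slipping through in the example shows why.

```python
import re
from typing import Dict, Iterable, Tuple

# Hypothetical detection rules; a real engine would not rely on regexes alone.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def anonymize(text: str, known_names: Iterable[str] = ()) -> Tuple[str, Dict[str, str]]:
    """Replace detected identifiers with placeholders.

    Returns the sanitized text plus a placeholder -> original mapping that
    is meant to stay on the local machine.
    """
    mapping: Dict[str, str] = {}
    seen: Dict[str, str] = {}  # original -> placeholder, so repeats share one token
    counters: Dict[str, int] = {}

    def token_for(kind: str, value: str) -> str:
        if value not in seen:
            counters[kind] = counters.get(kind, 0) + 1
            placeholder = f"[{kind}_{counters[kind]}]"
            seen[value] = placeholder
            mapping[placeholder] = value
        return seen[value]

    # Exact-match names supplied by the user, longest first to avoid partial hits.
    for name in sorted(set(known_names), key=len, reverse=True):
        text = re.sub(re.escape(name), lambda m: token_for("ORG", m.group(0)), text)

    # Pattern-based detection for structured identifiers.
    for kind, pattern in PATTERNS.items():
        text = pattern.sub(lambda m, k=kind: token_for(k, m.group(0)), text)
    return text, mapping


def restore(text: str, mapping: Dict[str, str]) -> str:
    """Re-insert original values into a model's answer, locally."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


safe, mapping = anonymize(
    "Contact Jane at jane.doe@acme.com or +1 (555) 123-4567 about Acme Corp renewal.",
    known_names=["Acme Corp"],
)
print(safe)  # Contact Jane at [EMAIL_1] or [PHONE_1] about [ORG_1] renewal.
```

Only `safe` would be sent to a model. The mapping stays local, so an answer that mentions `[ORG_1]` can be translated back with `restore` without the provider ever seeing the original name.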

From spreadsheets to insights in minutes

Once anonymization is complete, Novara lets users ask natural‑language questions of their Excel, Word, and PDF documents and receive structured, context‑aware answers. Financial analysts, sales teams, and strategy leaders can generate summaries, comparisons, and custom reports in minutes instead of spending days on manual Excel modeling.
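Anonymized spreadsheets can still support summaries and comparisons if identifiers are replaced consistently: the same customer always maps to the same pseudonym, so totals and groupings survive. The sketch below assumes keyed hashing (HMAC-SHA256 from Python's standard library) with a key that never leaves the customer's infrastructure. Questa does not document using this technique, and `LOCAL_KEY`, `pseudonym`, and `sanitize_rows` are hypothetical names.

```python
import hashlib
import hmac
from collections import defaultdict
from typing import Dict, Iterable, List

# Hypothetical secret; in practice it would live in an on-premise key store
# and never be sent to any AI provider.
LOCAL_KEY = b"replace-with-an-on-premise-secret"


def pseudonym(value: str, key: bytes = LOCAL_KEY) -> str:
    """Deterministic, keyed pseudonym: the same input always yields the same token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "CUST_" + digest[:12]


def sanitize_rows(rows: Iterable[Dict[str, str]], id_columns: List[str]) -> List[Dict[str, str]]:
    """Pseudonymize identifier columns and leave business columns untouched."""
    return [
        {col: pseudonym(val) if col in id_columns else val for col, val in row.items()}
        for row in rows
    ]


rows = [
    {"customer": "Acme Corp", "region": "West", "revenue": "120000"},
    {"customer": "Globex", "region": "East", "revenue": "95000"},
    {"customer": "Acme Corp", "region": "West", "revenue": "30000"},
]
safe_rows = sanitize_rows(rows, id_columns=["customer"])

# Aggregation still works on sanitized rows because pseudonyms are consistent.
totals: Dict[str, int] = defaultdict(int)
for row in safe_rows:
    totals[row["customer"]] += int(row["revenue"])
print(sorted(totals.values()))  # [95000, 150000]
```

Because the key stays local, a provider that only sees `CUST_` tokens cannot simply hash candidate company names to reverse them, while the local side can keep its own lookup table to translate answers back.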

A key design choice is model flexibility: Novara is LLM‑agnostic, so teams can route different tasks to different models without getting locked into a single provider. This lets enterprises compare model strengths (for example, one model for numerical reasoning and another for summarization) while keeping the same privacy-first guardrails around their data.
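Model flexibility of this kind usually comes down to putting every provider behind one small interface and sanitizing before any provider is chosen. The sketch below is hypothetical throughout: the backends are stubs rather than real GPT, Claude, or Gemini SDK calls, `redact_emails` stands in for a full anonymizer, and `PrivacyRouter` and the task names are illustrative.

```python
import re
from typing import Callable, Dict

# A backend is any callable that turns a prompt into an answer. Real ones would
# wrap a provider SDK; these stubs just echo what they received.
Backend = Callable[[str], str]


def redact_emails(text: str) -> str:
    """Stand-in sanitizer; a real deployment would call the full anonymizer."""
    return re.sub(r"[\w.+-]+@[\w-]+\.[\w.-]+", "[EMAIL]", text)


class PrivacyRouter:
    """Hypothetical LLM-agnostic router: one sanitizer in front of many backends."""

    def __init__(self, sanitize: Callable[[str], str], routes: Dict[str, Backend], default: str):
        if default not in routes:
            raise ValueError(f"default route {default!r} is not registered")
        self.sanitize = sanitize
        self.routes = routes
        self.default = default

    def ask(self, task: str, document: str, question: str) -> str:
        # Sanitize first, so every provider receives the same redacted payload.
        prompt = f"{question}\n\n{self.sanitize(document)}"
        backend = self.routes.get(task, self.routes[self.default])
        return backend(prompt)


def numeric_model(prompt: str) -> str:  # hypothetical stand-in for a numbers-focused model
    return "numeric-model saw: " + prompt


def summary_model(prompt: str) -> str:  # hypothetical stand-in for a summarization model
    return "summary-model saw: " + prompt


router = PrivacyRouter(
    sanitize=redact_emails,
    routes={"numbers": numeric_model, "summarize": summary_model},
    default="summarize",
)
print(router.ask("numbers", "Q3 revenue rose 12%; contact cfo@example.com", "What changed?"))
```

Swapping or adding a provider then means editing the `routes` dictionary; the redaction step in front of it stays the same.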

Why this matters for AI in business

For businesses, privacy-first AI analytics is less about marketing and more about operational risk. Because sensitive fields are stripped out locally, Novara allows more teams to adopt AI for tasks like board reporting, customer feedback analysis, and M&A research with less risk of breaching internal policies or regulatory rules.

- It unlocks AI for highly regulated workflows, including finance and healthcare, where data residency and consent are non‑negotiable.
- It supports internal governance because leaders can point to concrete controls: no prompt logging, no model training on customer data, and auditable anonymization.
- It supports vendor diversification, since companies can safely test multiple AI services behind the same anonymization layer.

Cybersecurity and compliance implications

From a cybersecurity perspective, Novara’s approach reduces the attack surface created when sensitive data is shipped to third‑party AI endpoints. Anonymization before transmission limits what an external breach or misconfiguration at an AI provider could expose, because the provider never sees raw identifiers in the first place.

For US enterprises facing tightening privacy and AI governance expectations, privacy-first AI analytics also aligns with security best practices such as data minimization and least privilege. The option for on‑premise deployment and national data residency helps organizations meet sector‑specific requirements in finance, healthcare, and critical infrastructure. Security teams can treat Novara like a specialized redaction gateway, integrating it into broader zero‑trust and data‑loss‑prevention strategies.

What’s next: privacy as a competitive edge

Looking ahead, privacy-first AI analytics is likely to become a competitive differentiator, not just a compliance checkbox. Organizations that can safely analyze sensitive contracts, patient records, or transactional logs with AI will move faster than peers stuck in manual-only workflows.

However, the approach is only as strong as its anonymization layer: if redaction misses edge‑case identifiers or is poorly configured, residual risk remains. More standardized testing, benchmarks, and third‑party audits for anonymization engines like Questa’s Blackbox are likely to follow, especially in high‑stakes domains. For now, Novara signals an important shift toward treating privacy-first AI analytics as default infrastructure, much like firewalls and VPNs became standard in earlier eras of enterprise IT.
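Testing of the kind described above can start very simply: run a redactor over documents with hand-labeled identifiers and count how many survive verbatim. This is an illustrative sketch only: `naive_redactor` deliberately catches nothing but well-formed email addresses, the test cases are made up, and a verbatim-substring check understates leaks such as partial or reformatted identifiers.

```python
import re
from typing import Callable, List, Tuple

# Each case pairs a document with the identifiers a human labeled in it.
Case = Tuple[str, List[str]]


def naive_redactor(text: str) -> str:
    """Deliberately weak: only well-formed email addresses are replaced."""
    return re.sub(r"[\w.+-]+@[\w-]+\.[\w.-]+", "[EMAIL]", text)


def leak_rate(redact: Callable[[str], str], cases: List[Case]) -> float:
    """Fraction of labeled identifiers that still appear verbatim after redaction."""
    total = leaked = 0
    for text, identifiers in cases:
        output = redact(text)
        for ident in identifiers:
            total += 1
            if ident in output:
                leaked += 1
    return leaked / total if total else 0.0


cases: List[Case] = [
    ("Email jane@acme.com today.", ["jane@acme.com"]),
    ("Reach jane at acme dot com.", ["jane at acme dot com"]),  # obfuscated edge case
    ("Patient ID 884-21-7730 seen Monday.", ["884-21-7730"]),    # no rule covers IDs
]
print(f"leak rate: {leak_rate(naive_redactor, cases):.2f}")  # leak rate: 0.67
```

A meaningful audit would need far larger, domain-specific labeled sets; the point here is only that leaked identifiers per labeled identifier is a simple number that third parties can check.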

Key Takeaways

- Privacy-first AI analytics lets companies tap AI for sensitive documents while keeping raw data on-premise or in a private cloud.
- Novara’s Blackbox anonymizer acts like an “AI firewall,” stripping out critical details before any model access and reducing the risk of data being used for training or leaked.
- Flexible LLM selection allows enterprises to optimize accuracy and cost without sacrificing privacy or locking into a single AI vendor.
- For cybersecurity, local anonymization narrows the blast radius of potential breaches and aligns with zero‑trust, data minimization, and compliance requirements.
- Sectors handling financial, health, or other regulated data can adopt AI analytics more broadly when privacy-by-design tools like Novara are part of the stack.

References

- EIN Presswire, “Questa launches a new privacy focused AI Assistant to get insights from business files without any AI Training on them”
- Questa AI, main product site: https://www.questa-ai.com
- Questa Solutions, Privacy Protected AI overview: https://questa.solutions/ourportfolio/privacy-protected-ai
- Questa AI, platform overview: https://questa.solutions
- Questa Data Science team, “Under the Hood: Building a Privacy-First Anonymizer for LLMs”
- F6S, company profile for Questa AI: https://www.f6s.com/company/questa-ai