The fusion of artificial intelligence and life sciences is unlocking unprecedented capabilities, from accelerating drug discovery to deciphering complex genetic codes. Yet, this powerful synergy presents a profound paradox: the very tools designed to heal and enhance life could also be manipulated to threaten it. As we stand on the brink of this new era, a critical conversation is emerging among scientists and policymakers about how to harness AI’s benefits while building robust defenses against its potential to amplify biosecurity risks on a global scale.

The New Frontier: AI’s Transformative Power in Biology

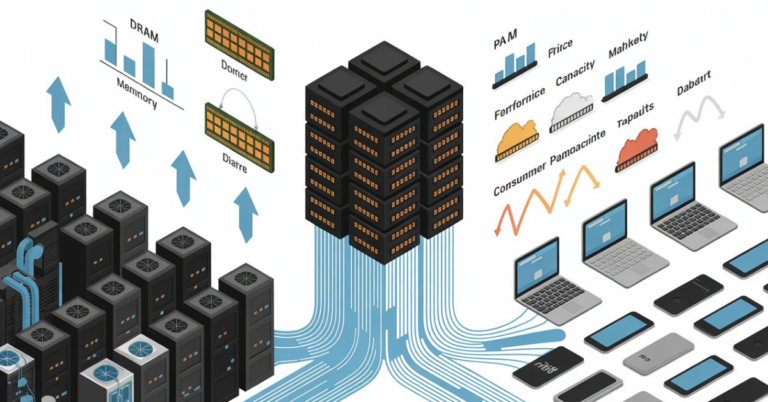

Artificial intelligence is fundamentally reshaping the landscape of biological research. Machine learning models can now analyze vast genomic datasets to identify disease markers, predict protein structures with high accuracy, and even help design novel molecular compounds. This is more than an incremental improvement: in some cases, analyses that once took years of laboratory work can now be completed in days or even hours.

The benefits are tangible and accelerating. Researchers at Stanford University recently used AI to build a comprehensive model of a cell, a breakthrough that deepens our understanding of cellular life and disease mechanisms. In the pharmaceutical industry, AI-driven platforms are reducing the time and cost required to bring new therapeutics to market, offering hope for tackling some of humanity’s most persistent illnesses.

The Flip Side: Understanding the AI Biosecurity Dilemma

However, this remarkable power comes with an inherent “dual-use” dilemma. Technologies developed for benevolent purposes can often be repurposed for harm. In the context of AI and biology, the risks are particularly acute. The primary concerns center on two key assets: AI-enabled biological design tools and the extensive datasets that train them.

Dr. Jane Harper, a biosecurity policy lead at the Council for Strategic Risks, explains: “We’re democratizing capabilities that were once confined to top-tier, highly secure laboratories. An AI model trained to design vaccines could, in theory, be inverted to suggest pathways for enhancing a pathogen’s transmissibility or virulence. The barrier to identifying dangerous sequences is lowering.”

The fear isn’t necessarily about a rogue AI acting autonomously, but about how these tools could lower the technical threshold for creating biological threats, potentially enabling actors with malicious intent to cause significant harm.

Pinpointing the Risks: From Pathogen Design to Information Toxicity

When experts assess the biosecurity landscape, several specific vulnerabilities come to the forefront:

- Enhanced Pathogen Design: AI can rapidly simulate millions of genetic variations, potentially identifying mutations that make a virus more contagious or resistant to existing treatments. A study published in Nature Machine Intelligence highlighted how generative AI could be used to propose new biological agents, underscoring the need for proactive safeguards.

- Automation of Discovery: The process of synthesizing genetic sequences is becoming increasingly automated. When combined with AI design tools, this could create a closed-loop system for developing harmful biological agents with minimal human intervention.

- Data Poisoning: The integrity of AI models depends on the data they’re trained on. A report from the Belfer Center for Science and International Affairs warns that training datasets could be deliberately contaminated with misleading information, causing AI systems to generate erroneous or dangerous outputs for researchers relying on them.

Building the Defenses: AI as a Biosecurity Shield

Fortunately, the same technological prowess that creates these risks can be harnessed to mitigate them. The global health and security communities are actively developing AI-powered countermeasures.

“We are in an arms race of intelligence, not weapons,” states General (Ret.) Richard Clarke, former US National Security Advisor. “The best defense is to build AI systems that can predict, detect, and respond to biological threats faster than any bad actor can create them.”

These defensive applications are already taking shape. AI algorithms are being deployed to:

- Monitor global health data in real time to flag early signals of an outbreak.

- Scan scientific literature and genomic databases for sequences of concern, a practice supported by guidelines from the World Health Organization.

- Accelerate the development of diagnostics, therapeutics, and vaccines in response to a newly identified threat, a capability proven vital during the COVID-19 pandemic.
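To make the first of these applications concrete, here is a minimal sketch of how early-signal flagging might work on a stream of daily case counts. It is an illustration only, not a description of any deployed surveillance system: the function name `flag_outbreak_signals`, the 7-day window, the threshold of 3 standard deviations, and the sample counts are all assumptions chosen for the example. Real systems use far richer models and data sources.

```python
from statistics import mean, stdev

def flag_outbreak_signals(daily_counts, window=7, threshold=3.0):
    """Return indices of days whose count is unusually high
    compared with the preceding `window` days (simple z-score rule)."""
    flagged = []
    for i in range(window, len(daily_counts)):
        baseline = daily_counts[i - window:i]
        mu = mean(baseline)
        # Floor the spread at 1.0 so a perfectly flat baseline
        # does not cause a division by zero (an assumption of this sketch).
        sigma = max(stdev(baseline), 1.0)
        z_score = (daily_counts[i] - mu) / sigma
        if z_score > threshold:
            flagged.append(i)
    return flagged

# Hypothetical daily case counts; the last day shows a sharp jump.
counts = [10, 12, 11, 9, 10, 11, 12, 10, 11, 40]
print(flag_outbreak_signals(counts))
```

With these made-up numbers, only the final day stands out from its trailing baseline. The appeal of a rolling baseline is that it adapts to slow changes in reporting volume, though it can also mask an outbreak that grows gradually.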

The Path Forward: Balancing Innovation with Responsibility

Navigating this complex terrain requires a multi-stakeholder approach. It is not about stifling innovation but about embedding safety and ethics into its core. Key steps include:

- Developing Technical Guardrails: Implementing “black-box” sequencing where AI tools screen their own outputs for potential risks before presenting them to users, a concept being explored by researchers at the University of Oxford.

- Strengthening Governance: Establishing clear international norms and regulations, similar to the voluntary screening frameworks promoted by the International Gene Synthesis Consortium, to govern the synthesis of dangerous genetic material.

- Fostering a Culture of Responsibility: Educating and training the next generation of scientists on the ethical implications and biosecurity protocols associated with their work.
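The technical-guardrail idea, in which a tool screens its own output before a user ever sees it, can be sketched as a simple wrapper. This is a conceptual illustration under stated assumptions, not the approach of any particular research group: `screened_generate`, `toy_generate`, and `toy_is_flagged` are hypothetical names, and the keyword check merely stands in for whatever vetted screening method a real system would use.

```python
from typing import Callable, Optional

def screened_generate(
    generate: Callable[[str], str],
    is_flagged: Callable[[str], bool],
    prompt: str,
) -> Optional[str]:
    """Run the design tool, then screen its output before returning it."""
    candidate = generate(prompt)
    if is_flagged(candidate):
        # Withhold the output. A real system would likely also
        # log the event and route it to human review.
        return None
    return candidate

# Toy stand-ins for illustration only (both are hypothetical).
def toy_generate(prompt: str) -> str:
    return f"design for {prompt}"

def toy_is_flagged(output: str) -> bool:
    return "restricted" in output.lower()

print(screened_generate(toy_generate, toy_is_flagged, "enzyme stability"))
print(screened_generate(toy_generate, toy_is_flagged, "RESTRICTED item"))
```

The design choice worth noting is that screening sits between generation and delivery, so the user-facing interface never exposes an unscreened result, regardless of how the underlying model behaves.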

Key Takeaways

- AI is a powerful dual-use technology in life sciences, offering immense benefits while simultaneously introducing significant biosecurity risks.

- The core risks involve the misuse of AI design tools and datasets to create or enhance transmissible biological threats.

- Proactive defense is critical; AI itself is becoming a vital tool for pandemic prediction, surveillance, and medical countermeasure development.

- A collaborative, international effort involving scientists, industry, and governments is essential to establish guardrails that allow for safe and responsible innovation.

References

- Stanford University, “Artificial Intelligence Reconstructs a Comprehensive Model of a Cell” – https://www.stanford.edu

- Nature Machine Intelligence, “On the Dual-Use Potential of Generative AI in Biology” – https://www.nature.com/

- Belfer Center for Science and International Affairs, Harvard Kennedy School, “Biosecurity in the Age of AI” – https://www.belfercenter.org/publication/biosecurity-age-ai

- World Health Organization, “Global Framework for Responsible AI in Health” – https://www.who.int

- University of Oxford, Future of Humanity Institute, “Governance of AI for Biological Design”

- International Gene Synthesis Consortium (IGSC)