AI existential safety index scores are sending a clear warning about how unprepared major labs are for their own technologies. Recent grades for companies like Meta, DeepSeek, and xAI show weak planning around catastrophic and existential risks, even as they sprint toward more powerful frontier models. Regulators in places like California are starting to respond with new transparency and risk‑disclosure rules, but the gap between capability and governance is still wide. The real question is no longer whether AI can trigger systemic harm, but whether institutions can mature fast enough to keep up.

Key Takeaways

-

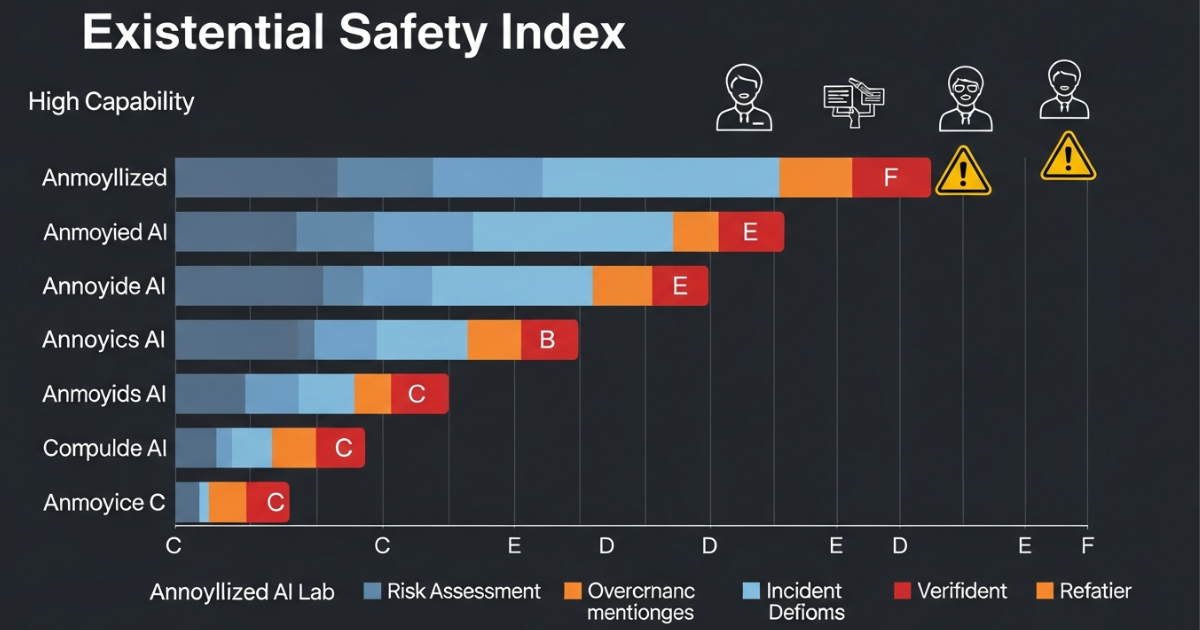

The latest AI existential safety index shows most leading labs scoring in the D–F range on existential risk preparedness.

-

Labs that talk openly about superintelligence often have no concrete, testable plans for containing catastrophic model failures.

-

Emerging regulation is moving from voluntary “principles” to enforceable rules and audits for frontier models.

-

Experts argue for an “FDA for AI” model: licensing, pre‑deployment evaluations, and ongoing monitoring as capabilities scale.

-

Existential risks grow out of today’s real harms—cyberattacks, manipulation, and over‑reliance on AI—rather than science‑fiction scenarios.

Why the AI Existential Safety Index Matters

The AI existential safety index produced by civil-society groups like the Future of Life Institute evaluates whether leading labs have the governance, engineering, and culture needed to manage catastrophic AI risks. Across dimensions such as safety frameworks, red‑teaming, model governance, and public transparency, most firms struggle to earn even mid‑tier grades, and existential safety scores cluster at the bottom.

In practice, companies capable of training models with dangerous capabilities still lack robust playbooks for shutdown, incident response, and long‑tail risk analysis. “You would not certify a nuclear plant with a sketchy evacuation plan, yet we are handing frontier AI systems global reach with little more than aspirational blog posts,” argues Dr. Lena Khatri, an AI policy researcher at the University of Toronto.

What “Existential Safety” Really Covers

Existential AI risk is any trajectory where AI systems undermine humanity’s long‑term potential—through loss of control, large‑scale accidents, or cascading social and economic failures. Near‑term issues such as autonomous weapons, critical‑infrastructure access, and high‑leverage cyber tools are treated as risk factors that raise the odds of severe scenarios later.

Research across law, health, and international governance stresses that existential risk emerges from today’s deployments: opaque models, weak verification, and the concentration of powerful systems in a few private actors. “The technical curve is exponential, but institutional learning is still linear,” notes Dr. Miguel Sørensen, lead analyst at the Global Risk Futures Lab. “That structural mismatch is at the heart of existential AI risk.”

Why Big Labs Are Scoring So Poorly

Most major AI labs now publish safety principles, but independent evaluations show a large gap between principles and enforceable practice. Governance researchers argue that firms over‑index on glossy ethics statements while under‑investing in binding internal controls, independent oversight, and rigorous worst‑case testing.

Competitive pressure intensifies the problem: releasing new models faster than rivals often wins more market share than quietly delaying for deeper safety work. “As long as frontier labs are rewarded primarily for capability milestones, safety teams will be playing catch‑up inside their own companies,” says Sara Goldstein, CEO of the AI risk consultancy Sentinel Systems.

From Principles to Rules: The New Regulatory Wave

Policy thinkers are converging on a frontier AI regulation model that targets the most capable general‑purpose systems and the entities that build them. Proposals from industry and academia include mandatory safety evaluations, registration of high‑risk training runs, incident reporting, and escalating obligations as compute and capability thresholds are crossed.
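To make the idea of escalating obligations concrete, here is a minimal sketch of how tiered duties could be looked up from a training run's compute. The FLOP cutoffs, the tier contents, and the name `obligations_for` are hypothetical illustrations, not values taken from any statute or proposal.

```python
# Hypothetical tiers: the FLOP cutoffs and obligation names below are
# illustrative assumptions, not figures from any real regulation.
TIERS = [
    (1e24, ["registration of training run"]),
    (1e25, ["mandatory safety evaluation", "incident reporting"]),
    (1e26, ["third-party audit before deployment"]),
]


def obligations_for(training_flop: float) -> list[str]:
    """Return every obligation whose threshold the run meets or exceeds.

    Obligations accumulate: crossing a higher tier keeps all lower-tier duties.
    """
    duties: list[str] = []
    for threshold, tier_duties in TIERS:
        if training_flop >= threshold:
            duties.extend(tier_duties)
    return duties


if __name__ == "__main__":
    for flop in (5e23, 1e25, 3e26):
        print(f"{flop:.0e} FLOP -> {obligations_for(flop)}")
```

The point of the cumulative design is that a lab never sheds a duty by scaling up; a real regime would likely combine compute with capability evaluations rather than rely on compute alone.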

California’s recent work is an early example: it emphasizes public transparency on catastrophic risks, mitigation plans, and security controls around frontier models. Legal scholars increasingly argue that states have a positive obligation under human‑rights law to regulate AI proactively when plausible existential threats are involved.

Why an “FDA for AI” Is Gaining Traction

The “FDA for AI” analogy captures a simple idea: before deploying powerful AI systems at scale, companies should have to prove a reasonable level of safety to an expert authority. In practice, that could include licensing for frontier labs, pre‑deployment testing standards, post‑market surveillance of real‑world harms, and the power to suspend or recall unsafe systems.

The UN and national advisory bodies are already exploring verification regimes and technical audits for advanced models. “Without independent checks on capabilities, data pipelines, and security, existential safety is just a branding exercise,” argues Dr. Aisha Rahman, a senior advisor to a UN AI verification working group.

How Labs Can Improve Failing Scores

Turning a failing AI existential safety index score into a strength requires board‑level accountability, clear risk thresholds where development pauses automatically, and tight integration of safety metrics into product decisions. Labs also need much more transparency—publishing evaluations, incident post‑mortems, and whistleblower protections so external researchers can pressure‑test claims.
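One way to picture a risk threshold that pauses development automatically is a gate that compares evaluation scores against pre-agreed limits. The sketch below is an assumption-laden illustration: the category names, the 0.0 to 1.0 score scale, and the limits in `RISK_LIMITS` are all hypothetical.

```python
from typing import Optional

# Hypothetical limits on dangerous-capability evaluation scores (0.0 to 1.0).
# Category names and numbers are illustrative assumptions only.
RISK_LIMITS = {"cyber_offense": 0.30, "autonomy": 0.20, "bio_uplift": 0.10}


def breached_limits(
    scores: dict[str, float],
    limits: Optional[dict[str, float]] = None,
) -> list[str]:
    """List every risk category that should force a pause.

    A category is breached when its score exceeds the limit, or when the
    evaluation result is missing entirely (the gate fails closed).
    """
    limits = RISK_LIMITS if limits is None else limits
    breached = []
    for category, limit in sorted(limits.items()):
        score = scores.get(category)
        if score is None or score > limit:
            breached.append(category)
    return breached


def may_continue(scores: dict[str, float]) -> bool:
    """Development may continue only if no limit is breached."""
    return not breached_limits(scores)


if __name__ == "__main__":
    latest = {"cyber_offense": 0.5, "autonomy": 0.1}
    print(breached_limits(latest), may_continue(latest))
```

Treating a missing evaluation as a breach is a deliberate choice here: a gate that passes when a test was never run would reward skipping the test.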

Strategically, existential risk should be treated as an extension of current harms rather than a distant thought experiment. As Sørensen puts it, “The labs that treat existential safety as a design constraint, not a PR cost, will define who actually gets to build the future.”

References

- Existential Risks & Global Governance Issues Around AI & Robotics – SSRN. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4174399
- Potential for near-term AI risks to evolve into existential threats – BMJ / NIH. https://pmc.ncbi.nlm.nih.gov/articles/PMC12035420/
- The International Obligation to Regulate Artificial Intelligence – Michigan Journal of International Law. https://repository.law.umich.edu/cgi/viewcontent.cgi?article=2169&context=mjil
- From Principles to Rules: A Regulatory Approach for Frontier AI – Centre for the Governance of AI. https://www.governance.ai/research-paper/from-principles-to-rules-a-regulatory-approach-for-frontier-ai
- Frontier AI Regulation: Managing Emerging Risks to Public Safety – OpenAI. https://openai.com/index/frontier-ai-regulation/
- California Frontier AI Working Group Report on Frontier Models. https://www.cafrontieraigov.org
- The 2025 AI Index Report – Stanford HAI. https://hai.stanford.edu/ai-index/2025-ai-index-report